Extract Website Data to Excel: Easy Steps

In today's data-driven world, the ability to gather, analyze, and organize information efficiently is paramount for businesses, researchers, and enthusiasts alike. One of the most common tasks is to extract data from websites and import it into a format like Microsoft Excel for further processing and analysis. In this post, we'll guide you through an easy, step-by-step process to extract website data into Excel using web scraping tools.

Understand Your Needs

Before diving into the technicalities, you must understand what data you want to extract. Websites can offer a wealth of information, but not all will be relevant for your purposes. Here are some steps to define your data requirements:

- Identify the type of data: Are you looking for text, images, links, or structured data?

- Scope: Determine the breadth of your extraction. Will it be from one page, multiple pages, or an entire site?

- Frequency: Do you need this data once or would you want it regularly updated?

Choosing the Right Tool

There are several tools available for web scraping:

- Web Scrapers: Tools like Octoparse or ParseHub simplify the process through visual interfaces.

- Browser Extensions: Chrome extensions such as Data Miner and Instant Data Scraper provide an easy way to extract data.

- Programming: If you can code, writing a scraper in Python with BeautifulSoup or in JavaScript with Puppeteer gives you the most room for customization.

🚀 Note: For extensive data extraction, consider server-side solutions or script automation, especially when dealing with dynamic content.

Step-by-Step Extraction Process

Step 1: Plan Your Extraction Strategy

- List out the data fields you need to extract.

- Understand the website structure. Does it have dynamic content or APIs that could ease your extraction?

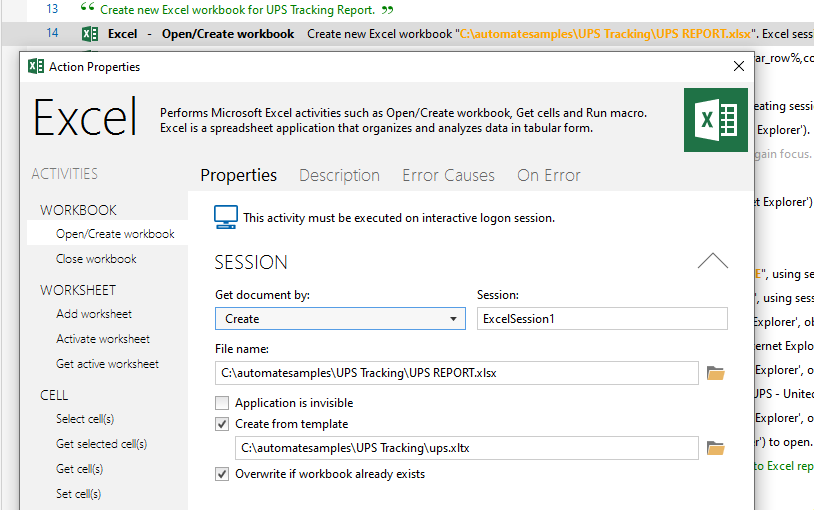

Step 2: Set Up Your Environment

- Install your chosen tool or extension.

- If you're programming, set up your IDE and necessary libraries.

Step 3: Extract the Data

- Open the website in your browser.

- Use your scraping tool to highlight, click on, or select the data elements.

- If programming, write your code to target the HTML elements.
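If you take the programming route, targeting HTML elements could look roughly like the sketch below. It uses Python's standard-library `html.parser` instead of BeautifulSoup so that it runs without installing anything. The class name `TableExtractor`, the helper `extract_rows`, and the sample markup are all made up for illustration, and a real page will need its own selectors.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row currently being built, or None outside <tr>
        self._cell = None   # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def extract_rows(html):
    """Return a list of rows, each a list of cell strings."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

rows = extract_rows(SAMPLE_HTML)
print(rows)
```

The first row holds the headers and the remaining rows hold the values, which maps directly onto a spreadsheet layout.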

Step 4: Data Cleanup and Validation

- Ensure the data is clean and free of unnecessary HTML tags.

- Validate the data for completeness and accuracy.
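The cleanup and validation step can be sketched in a few lines of Python. This is only one possible approach: the regular expression is a simple way to drop leftover tags and is not a full HTML parser, and the function names, field names, and sample rows are assumptions made for the example.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")  # naive match for leftover HTML tags


def clean_cell(value):
    """Strip leftover tags, decode entities, and collapse whitespace."""
    text = TAG_RE.sub("", value)
    text = html.unescape(text)
    return re.sub(r"\s+", " ", text).strip()


def validate_rows(rows, required_fields):
    """Clean every row, then split rows into (valid, rejected).

    A row is rejected when any required field is empty after cleaning.
    """
    valid, rejected = [], []
    for row in rows:
        cleaned = {key: clean_cell(val) for key, val in row.items()}
        if all(cleaned.get(field) for field in required_fields):
            valid.append(cleaned)
        else:
            rejected.append(cleaned)
    return valid, rejected


# Hypothetical scraped rows with typical noise.
raw_rows = [
    {"name": "<b>Widget</b> &amp; Co", "price": " 9.99 "},
    {"name": "<span></span>", "price": "24.50"},
]
valid, rejected = validate_rows(raw_rows, required_fields=("name", "price"))
print(valid)
print(rejected)
```

Keeping the rejected rows instead of silently dropping them makes it easier to see whether the scraper missed an element or the source page really had a gap.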

Step 5: Export to Excel

- Use your tool's export function to convert data to CSV or Excel format.

- If you have control over the process, consider scripting to automate this part.
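If you script the export yourself, a CSV file is usually the simplest target because Excel opens it directly. The sketch below uses Python's built-in `csv` module. The function names, field names, and the `products.csv` path are placeholders, and the `utf-8-sig` encoding is chosen on the assumption that you want Excel to recognize non-ASCII characters.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def save_csv(rows, fieldnames, path):
    """Write the CSV to disk in a form Excel can open."""
    # utf-8-sig prepends a byte-order mark, which helps Excel detect UTF-8.
    # newline="" keeps the csv module's own line endings untouched.
    with open(path, "w", newline="", encoding="utf-8-sig") as handle:
        handle.write(rows_to_csv(rows, fieldnames))


# Hypothetical cleaned rows from the previous steps.
sample_rows = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget, large", "price": "24.50"},
]
csv_text = rows_to_csv(sample_rows, fieldnames=["name", "price"])
print(csv_text)

# To write a file instead of printing:
# save_csv(sample_rows, ["name", "price"], "products.csv")
```

Values that contain commas are quoted automatically, so they stay in a single Excel cell.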

| Tool | Use Case | Complexity |
|---|---|---|
| Web Scrapers (Octoparse) | Complex, large-scale scraping with no coding knowledge required. | Low to Medium |
| Browser Extensions (Data Miner) | Quick extraction from static pages or single-page applications. | Low |
| Programming (BeautifulSoup) | Custom, large-scale extractions with complex logic. | High |

🛠 Note: The complexity of the website and the level of detail you need from the data will influence your choice of tool.

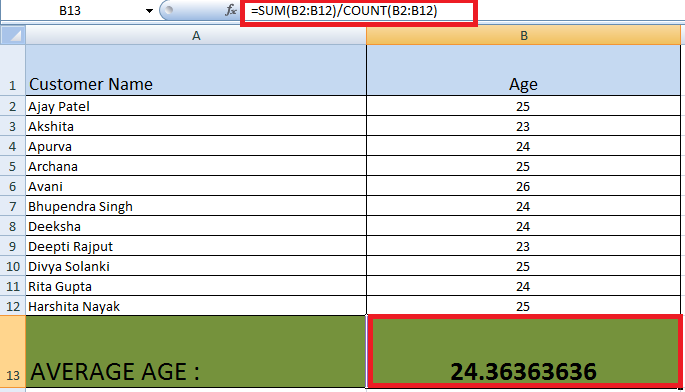

Step 6: Analyze and Use Your Data

- Now that you have your data in Excel, start your analysis, visualization, or whatever process your task demands.

Extracting website data into Excel supports data analysis, market research, competitive intelligence, and more. The steps above give you a straightforward starting point. Keep in mind that each website may need its own approach, because page structures and loading mechanisms differ.

Not all websites permit scraping, so check and respect each site's terms of service. When collecting large volumes of data, pace your requests so you don't overwhelm the server, and follow the legal and ethical guidelines that apply to web scraping.
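One way to avoid overwhelming a server from a script is to honor the site's `robots.txt` rules and pause between requests. The sketch below uses Python's `urllib.robotparser` for this. Note that `robots.txt` is a separate signal from a site's terms of service, so it complements reading those terms and does not replace it. The user agent `example-bot`, the sample rules, the URLs, and the `fetch` callable are all hypothetical.

```python
import time
from urllib import robotparser


def build_robot_rules(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def crawl(urls, rules, fetch, user_agent="example-bot", default_delay=1.0):
    """Fetch only the allowed URLs, pausing between requests.

    `fetch` is any callable that takes a URL and returns its content.
    """
    delay = rules.crawl_delay(user_agent)
    if delay is None:
        delay = default_delay  # fall back when the site sets no Crawl-delay
    allowed = [url for url in urls if rules.can_fetch(user_agent, url)]
    results = {}
    for index, url in enumerate(allowed):
        if index > 0:
            time.sleep(delay)  # wait before every request after the first
        results[url] = fetch(url)
    return results


# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rules = build_robot_rules(SAMPLE_ROBOTS_TXT)
print(rules.can_fetch("example-bot", "https://example.com/products"))
print(rules.can_fetch("example-bot", "https://example.com/private/data"))
```

Passing the download function in as `fetch` keeps the pacing logic separate from whichever HTTP library you choose.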

What tools can I use for web scraping?

Popular tools include Octoparse, ParseHub, Data Miner, and programmatic approaches like BeautifulSoup in Python or Puppeteer in JavaScript.

Is it legal to scrape data from any website?

Not always. Check the website’s terms of service. Always scrape ethically and considerately to avoid legal issues.

How can I handle dynamic websites with JavaScript?

Puppeteer, or visual web scrapers that render JavaScript, can handle dynamic content effectively.
