5 Simple Steps to Scrape Website Data into Excel

The ability to extract and analyze data from websites has become indispensable in many fields, from marketing to competitive analysis. Scraping web data into Excel lets you gather information quickly, automate data collection, and organize it for easy analysis. This guide walks through five simple steps to scrape website data into Excel, making your data analysis smoother and more effective.

Step 1: Identify the Data You Need

Before you begin scraping, you need to:

- Clearly define what data you’re interested in collecting.

- Determine the specific website(s) or pages where this data resides.

- Examine the structure of the webpage to understand where and how the data is presented.
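One way to examine a page's structure is to count which tag and class combinations appear in its HTML; combinations that repeat often tend to mark the records you want. The sketch below assumes BeautifulSoup is installed and uses a made-up `SAMPLE_HTML` string in place of a real page; the helper name `summarize_structure` is hypothetical.

```python
from collections import Counter

from bs4 import BeautifulSoup

# Made-up HTML standing in for a downloaded page (an assumption for this sketch).
SAMPLE_HTML = """
<html><body>
  <h1 id="title">Catalog</h1>
  <div class="product"><span class="name">Widget</span><span class="price">9.99</span></div>
  <div class="product"><span class="name">Gadget</span><span class="price">19.50</span></div>
  <div class="footer">Contact us</div>
</body></html>
"""


def summarize_structure(html: str) -> Counter:
    """Count every tag.class combination found in the HTML."""
    soup = BeautifulSoup(html, "html.parser")
    counts = Counter()
    # find_all(True) matches every tag in the document.
    for tag in soup.find_all(True):
        # The class attribute is multi-valued, so it comes back as a list.
        for css_class in tag.get("class", []):
            counts[f"{tag.name}.{css_class}"] += 1
    return counts


summary = summarize_structure(SAMPLE_HTML)
for selector, count in summary.most_common():
    print(selector, count)
```

In this sample, `div.product` repeats once per item, which suggests it is the container to target when defining selectors later.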

Some tips include:

- Check for any anti-scraping measures or legal restrictions on the site you want to scrape.

- Look for unique markers, such as element IDs or class names, that signal the presence of your desired data on the page.
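Checking a site's robots.txt rules can be done with Python's standard-library `urllib.robotparser`. The sketch below is a minimal illustration: the robots.txt content, the `my-scraper` user agent, and the example URLs are all made up, and a real scraper would download the file from the site instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for this sketch). For a live
# site, RobotFileParser.set_url(...) followed by read() downloads the file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "my-scraper"  # hypothetical user-agent name


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

allowed_public = parser.can_fetch(USER_AGENT, "https://example.com/products")
allowed_private = parser.can_fetch(USER_AGENT, "https://example.com/private/report")
delay = parser.crawl_delay(USER_AGENT)

print("products allowed:", allowed_public)
print("private allowed:", allowed_private)
print("requested crawl delay (seconds):", delay)
```

Note that robots.txt only expresses the site's crawling preferences; the terms of service still need to be read separately.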

Step 2: Choose the Right Tool for Scraping

Selecting the appropriate scraping tool is crucial for efficient data extraction:

- Browser Extensions: Tools like Web Scraper for Chrome can help you get started with a user-friendly interface.

- Programming Languages: Python, with libraries like BeautifulSoup or Scrapy, is powerful for complex scraping tasks.

- Cloud-Based Services: For those who prefer not to delve into coding, platforms like ParseHub or Octoparse offer cloud-based scraping with visual setup.

📝 Note: Always respect a website's terms of service and robots.txt file to avoid legal issues.

Step 3: Set Up Your Scraping Environment

Here’s how to get your environment ready:

- Install the necessary software or browser extension.

- If using Python, set up a virtual environment to manage dependencies.

- Configure your scraping tool:

  - Define selectors or rules for extracting data.

  - Decide how to handle dynamic content (such as JavaScript-generated data).

Example:

    import requests
    from bs4 import BeautifulSoup

    url = "https://example.com"
    response = requests.get(url)
    soup = BeautifulSoup(response.text, "html.parser")
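Defining selectors, as mentioned in the configuration step above, might look like the following sketch. It assumes BeautifulSoup is installed and runs against a made-up HTML string rather than a live page; the `div.product`, `span.name`, and `span.price` selectors and the `extract_records` helper are hypothetical and would need to match the real page's markup.

```python
from bs4 import BeautifulSoup

# Made-up HTML standing in for response.text (an assumption for this sketch).
SAMPLE_HTML = """
<div class="product"><span class="name">Widget</span><span class="price">9.99</span></div>
<div class="product"><span class="name">Gadget</span></div>
"""

# Hypothetical CSS selectors: one per field, evaluated inside each row.
FIELD_SELECTORS = {"name": "span.name", "price": "span.price"}


def extract_records(html, row_selector="div.product", field_selectors=FIELD_SELECTORS):
    """Turn each element matching row_selector into a dict of field values."""
    soup = BeautifulSoup(html, "html.parser")
    records = []
    for row in soup.select(row_selector):
        record = {}
        for field, selector in field_selectors.items():
            node = row.select_one(selector)
            # A missing element becomes None instead of raising an error.
            record[field] = node.get_text(strip=True) if node else None
        records.append(record)
    return records


records = extract_records(SAMPLE_HTML)
print(records)
```

Keeping the selectors in one dictionary makes them easy to adjust when the page layout changes.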

Step 4: Execute the Scraping Process

Once your environment is set:

- Run your scraper on the target site to extract the data.

- Verify the scraped data for accuracy and completeness:

  - Check for any missing or incorrect data.

  - Adjust your selectors or rules if necessary.

- Scrape the data in batches if dealing with large volumes.
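The verification and batching points above can be sketched in plain Python. The record layout (`name` and `price` fields), the sample data, and the helper names `find_incomplete` and `in_batches` are assumptions made for illustration.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical fields every record should have


def find_incomplete(records, required=REQUIRED_FIELDS):
    """Return (index, missing_fields) for each record lacking a required value."""
    problems = []
    for index, record in enumerate(records):
        # Treat absent keys, None, and empty strings as missing.
        missing = [field for field in required if not record.get(field)]
        if missing:
            problems.append((index, missing))
    return problems


def in_batches(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Made-up scraped records for the demonstration.
scraped = [
    {"name": "Widget", "price": "9.99"},
    {"name": "Gadget", "price": ""},
    {"name": None, "price": "4.00"},
]
problems = find_incomplete(scraped)
print("incomplete records:", problems)

# Made-up page identifiers, processed two at a time.
batches = list(in_batches(["p1", "p2", "p3", "p4", "p5"], 2))
print("batches:", batches)
```

A non-empty `problems` list is usually a sign that a selector no longer matches the page and needs adjusting.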

Example of Extracting Data:

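| Data Type | Python Code Snippet (illustrative; `soup` is the object created in Step 3) |
|---|---|
| Extracting Text from a Div | `soup.find("div").get_text(strip=True)` |
| Extracting Links | `[a["href"] for a in soup.find_all("a", href=True)]` |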

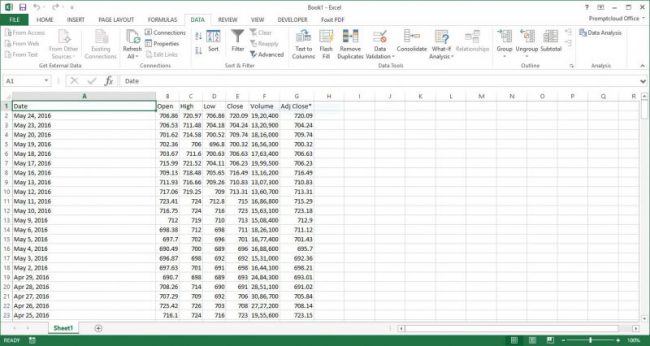

Step 5: Export Data to Excel

After collecting the data:

- Export or save your data in a format compatible with Excel:

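  - A pandas DataFrame in Python can be exported directly to Excel:

        import pandas as pd

        # `data` holds the scraped records, e.g. a list of dictionaries
        df = pd.DataFrame(data)
        # Writing .xlsx files requires an Excel engine such as openpyxl
        df.to_excel("output.xlsx", index=False)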

- Check that the data is formatted correctly.

- You can apply any necessary data manipulation or cleaning in Excel.

💡 Note: For large datasets, consider processing the data in chunks in Python or exporting to CSV to avoid memory issues. Keep in mind that a single Excel worksheet holds at most 1,048,576 rows.
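A chunked CSV export along these lines could be sketched as follows. This version uses the standard-library `csv` module rather than pandas, so the rows never need to sit in a single DataFrame; the `fake_chunks` generator, the column names, and the output file name are made up for the demonstration.

```python
import csv
import os
import tempfile


def write_csv_in_chunks(row_chunks, path, fieldnames):
    """Write an iterable of row lists to one CSV file; return the row count."""
    total = 0
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        for chunk in row_chunks:
            # Only the current chunk is held in memory at any time.
            writer.writerows(chunk)
            total += len(chunk)
    return total


def fake_chunks(n_chunks, chunk_size):
    """Stand-in for a scraper that yields its results batch by batch."""
    for chunk_index in range(n_chunks):
        first_id = chunk_index * chunk_size
        yield [
            {"id": first_id + offset, "value": f"item-{first_id + offset}"}
            for offset in range(chunk_size)
        ]


output_path = os.path.join(tempfile.gettempdir(), "scraped_output.csv")
rows_written = write_csv_in_chunks(fake_chunks(3, 4), output_path, ["id", "value"])
print(f"wrote {rows_written} rows to {output_path}")
```

Excel can open the resulting CSV file directly.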

By following these steps, you've learned not only how to scrape website data but also how to organize it in Excel for further analysis. This process supports systematic data collection, enabling you to make data-driven decisions in your projects or analyses.

Is web scraping legal?

Web scraping can be legal if you respect a website's terms of service and robots.txt file and do not overload its servers. Some jurisdictions have laws that restrict web scraping, and unauthorized access to data may be illegal.

What are the best tools for beginners to start web scraping?

For beginners, browser extensions like Web Scraper for Chrome or cloud-based services like ParseHub and Octoparse offer a gentle learning curve. For those interested in coding, Python with BeautifulSoup is an accessible option.

How can I prevent getting my IP blocked when scraping?

To avoid IP blocking:

- Use proxies to rotate IP addresses.

- Implement time delays or “smarter” crawling patterns.

- Check and respect the site’s robots.txt file.
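The time-delay idea can be sketched as a loop that pauses for a random interval between requests. The function name `crawl_politely`, the delay bounds, and the URLs are assumptions; the demonstration passes a stand-in `fetch` function and records the pauses instead of sleeping, so it runs instantly without touching the network.

```python
import random
import time


def crawl_politely(urls, fetch, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing a random interval between requests."""
    results = {}
    for position, url in enumerate(urls):
        if position > 0:
            # Randomized pauses avoid a fixed, machine-like request rhythm.
            sleep(random.uniform(min_delay, max_delay))
        results[url] = fetch(url)
    return results


# Demonstration: record the requested pauses rather than actually waiting.
pauses = []
pages = crawl_politely(
    [
        "https://example.com/page/1",
        "https://example.com/page/2",
        "https://example.com/page/3",
    ],
    fetch=lambda url: f"<html>{url}</html>",  # stand-in for requests.get(url).text
    sleep=pauses.append,
)
print(len(pages), "pages fetched with", len(pauses), "pauses")
```

In real use, leave `sleep` at its default and pass a `fetch` function that performs the actual HTTP request.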
